How to scrape Weibo hot search data with Python and save it

The script mainly uses two libraries: requests and bs4 (BeautifulSoup 4).
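The script below relies on two BeautifulSoup conveniences. Calling a tag like a function is shorthand for `find_all()`, and `.string` returns a tag's single text child (looking through a lone child tag) or `None` when the tag is empty or has several children. A small illustration on a made-up table row:

```python
from bs4 import BeautifulSoup

# Made-up row for illustration only, not real Weibo markup
html = '<tr><td>1</td><td><a href="#">Topic</a><span>42</span></td><td></td></tr>'
row = BeautifulSoup(html, "html.parser").tr

tds = row('td')  # same as row.find_all('td')
print(len(tds))  # 3
print(tds[0].string)  # 1
print(tds[1].string)  # None: this cell has two child tags, <a> and <span>
print(tds[1]('a')[0].string)  # Topic
print(tds[2].string)  # None: the cell is empty
```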

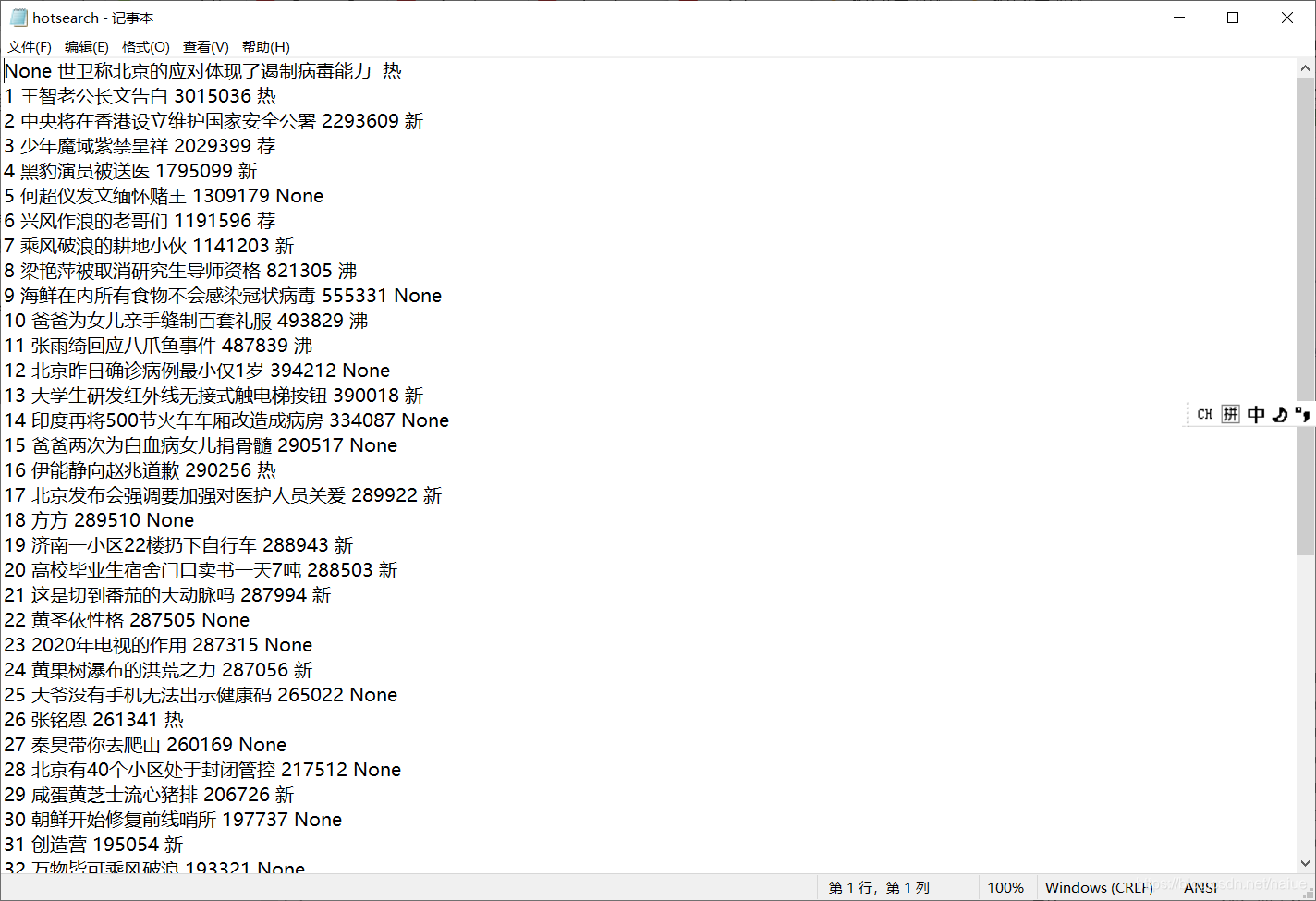

The scraped information is saved to d://hotsearch.txt.
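Note that the script below stores each entry as four space-separated fields. Because a hot search title can itself contain spaces, such a line cannot always be split back into its fields. One alternative, sketched here with the standard `csv` module, quotes fields when needed. The file name `hotsearch.csv` in the system temp directory and the sample rows are made up for illustration.

```python
import csv
import os
import tempfile

# Made-up rows in the same [rank, title, heat, label] shape the scraper builds
rows = [
    ['1', 'Topic with spaces', '123456', 'hot'],
    ['2', 'Another topic', '98765', None],  # csv.writer writes None as an empty field
]

path = os.path.join(tempfile.gettempdir(), 'hotsearch.csv')  # hypothetical output path

# newline='' lets the csv module control line endings; utf-8 covers Chinese titles
with open(path, 'w', newline='', encoding='utf-8') as f:
    writer = csv.writer(f)
    writer.writerow(['rank', 'title', 'heat', 'label'])
    writer.writerows(rows)

# Reading the file back recovers the original fields, spaces included
with open(path, newline='', encoding='utf-8') as f:
    restored = list(csv.reader(f))

print(restored[1])  # ['1', 'Topic with spaces', '123456', 'hot']
```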

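```python
import requests
import bs4
from bs4 import BeautifulSoup

url = 'https://s.weibo.com/top/summary?Refer=top_hot&topnav=1&wvr=6'
# Browser-like User-Agent; Weibo may additionally require a logged-in Cookie header
headers = {'User-Agent': 'Mozilla/5.0'}

mylist = []

r = requests.get(url=url, headers=headers, timeout=10)
print(r.status_code)  # HTTP status code of the response
r.encoding = r.apparent_encoding
demo = r.text

soup = BeautifulSoup(demo, "html.parser")
tbody = soup.find('tbody')
if tbody is None:
    raise SystemExit('No <tbody> found: the page layout changed or a login is required')

for link in tbody:
    # Skip the whitespace strings between rows; only Tag nodes are <tr> rows
    if not isinstance(link, bs4.element.Tag):
        continue
    lis = link('td')  # shorthand for link.find_all('td')
    hotname = lis[1]('a')[0].string  # hot search title
    span = lis[1].find('span')  # <span> holding the heat index, if present
    hotnumber = span.string if isinstance(span, bs4.element.Tag) else ''  # heat index
    # rank, title, heat index, label cell
    mylist.append([lis[0].string, hotname, hotnumber, lis[2].string])

# One space-separated line per entry; the with-block closes the file
with open("d://hotsearch.txt", "w", encoding='utf-8') as f:
    for line in mylist:
        f.write('%s %s %s %s\n' % (line[0], line[1], line[2], line[3]))
```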
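Because the live page can change its markup or demand a login at any time, it helps to check the parsing step offline. The sketch below moves the loop from the script into a function, `parse_rows` (a hypothetical name), and runs it on a hand-written fragment that only imitates the table layout the script assumes; it is not Weibo's real markup.

```python
import bs4
from bs4 import BeautifulSoup

# Hypothetical fragment imitating the assumed row layout:
# rank cell, cell with <a> title and optional <span> heat index, label cell
SAMPLE_HTML = """
<table><tbody>
<tr><td class="td-01"></td><td class="td-02"><a href="#">Pinned topic</a></td><td class="td-03"></td></tr>
<tr><td class="td-01">1</td><td class="td-02"><a href="#">Topic A</a><span>123456</span></td><td class="td-03"><i>hot</i></td></tr>
<tr><td class="td-01">2</td><td class="td-02"><a href="#">Topic B</a><span>98765</span></td><td class="td-03"><i>new</i></td></tr>
</tbody></table>
"""


def parse_rows(html):
    """Return [rank, title, heat, label] for each <tr> in the first <tbody>."""
    soup = BeautifulSoup(html, "html.parser")
    rows = []
    for tr in soup.find('tbody'):
        if not isinstance(tr, bs4.element.Tag):
            continue  # skip the whitespace strings between rows
        tds = tr('td')
        title = tds[1]('a')[0].string
        span = tds[1].find('span')
        heat = span.string if span is not None else ''
        rows.append([tds[0].string, title, heat, tds[2].string])
    return rows


for row in parse_rows(SAMPLE_HTML):
    print(row)
```

Keeping the parsing in a function that takes HTML text lets the download and the parsing be tested separately; `parse_rows(r.text)` would apply it to a real response.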

Further topic: scraping Weibo hot searches with Python and analyzing the data

Scraping Weibo hot searches

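```python
import os
import re
import time
from datetime import datetime

import pandas as pd
import requests
import schedule
from bs4 import BeautifulSoup

url = "https://s.weibo.com/top/summary?cate=realtimehot&sudaref=s.weibo.com&display=0&retcode=6102"
# Browser-like User-Agent; Weibo may additionally require a logged-in Cookie header
headers = {'User-Agent': 'Mozilla/5.0'}


def main():
    get_info_list = []
    print("Scraping data...")
    html = requests.get(url, headers=headers, timeout=10).text
    soup = BeautifulSoup(html, 'lxml')  # requires the lxml package
    # class_='' matches <tr> tags that have no class attribute
    for tr in soup.find_all(name='tr', class_=''):
        td = tr.find(class_='td-02')
        if td is None or td.find(name='a') is None:
            continue  # not a hot search row
        get_info = {'title': td.find(name='a').text}
        span = td.find(name='span')
        # Take the digits of the heat index instead of eval()-ing scraped text
        match = re.search(r'\d+', span.text) if span else None
        get_info['num'] = int(match.group()) if match else None
        get_info['time'] = datetime.now().strftime("%Y/%m/%d %H:%M")
        get_info_list.append(get_info)
    # Drop the first (pinned) entry and keep the next 15
    get_info_list = get_info_list[1:16]
    df = pd.DataFrame(get_info_list)
    # Write the header only when the file is first created, then just append rows;
    # errors='replace' substitutes characters that GBK cannot encode
    df.to_csv('datas.csv', mode='a', index=False,
              header=not os.path.exists('datas.csv'),
              encoding='gbk', errors='replace')


# Scheduled scraping: run main() once a minute
schedule.every(1).minutes.do(main)

while True:
    schedule.run_pending()
    time.sleep(1)  # avoid a busy loop between checks
```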
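Converting the heat index with `eval()` runs whatever text the page contains as Python code, and if the span ever holds extra text such as a label before the number, `eval()` raises `SyntaxError`, which an `except AttributeError` clause does not catch. A safer minimal sketch takes the first run of digits instead; the helper name `parse_heat` and the sample strings are hypothetical.

```python
import re
from typing import Optional


def parse_heat(text: Optional[str]) -> Optional[int]:
    """Return the first run of digits in text as an int, or None if there is none.

    Hypothetical helper: unlike eval(), it never executes the scraped text.
    """
    if not text:
        return None
    match = re.search(r'\d+', text)
    return int(match.group()) if match else None


print(parse_heat('1234567'))            # 1234567
print(parse_heat(' 剧集 341012'))       # 341012 (made-up text with a label before the number)
print(parse_heat('__import__("os")'))   # None: the text is never executed
print(parse_heat(None))                 # None
```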

Data analysis with pyecharts

```python
import pandas as pd
from pyecharts import options as opts
from pyecharts.charts import Bar, Timeline, Grid
from pyecharts.globals import ThemeType

df = pd.read_csv('datas.csv', encoding='gbk')
print(df)

t = Timeline(init_opts=opts.InitOpts(theme=ThemeType.MACARONS))  # custom theme

# Each scrape appended 15 rows, so every block of 15 rows is one snapshot
for i in range(df.shape[0] // 15):
    # Reverse the slice so rank 1 is drawn at the top once the axes are flipped
    chunk = df.iloc[i * 15: i * 15 + 15][::-1]
    bar = (
        Bar()
        .add_xaxis(chunk['title'].tolist())  # x-axis data; tolist() yields plain Python values
        .add_yaxis('num', chunk['num'].tolist())  # y-axis data
        .reversal_axis()  # flip the axes to draw horizontal bars
        .set_global_opts(  # global options
            title_opts=opts.TitleOpts(  # title options: the snapshot's timestamp
                title=str(df['time'].iloc[i * 15]),
                pos_right="5%", pos_bottom="15%",
                title_textstyle_opts=opts.TextStyleOpts(
                    font_family='KaiTi', font_size=24, color='#FF1493'
                ),
            ),
            xaxis_opts=opts.AxisOpts(  # x-axis options
                splitline_opts=opts.SplitLineOpts(is_show=True),
            ),
            yaxis_opts=opts.AxisOpts(  # y-axis options
                splitline_opts=opts.SplitLineOpts(is_show=True),
                axislabel_opts=opts.LabelOpts(color='#DC143C'),
            ),
        )
        .set_series_opts(  # series options
            label_opts=opts.LabelOpts(position="right", color='#9400D3'),  # bar labels
        )
    )
    # Leave room on the left for long titles
    grid = Grid().add(bar, grid_opts=opts.GridOpts(pos_left="24%"))
    t.add(grid, "")

t.add_schema(
    play_interval=1000,  # autoplay interval in milliseconds
    is_timeline_show=False,  # hide the timeline component
    is_auto_play=True,  # play automatically
)
t.render('時間輪播圖.html')  # output file ("time carousel chart")
```
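The chart code assumes every scrape contributed exactly 15 rows, so that `i * 15` always lands on the first row of a snapshot; if one run saved fewer rows, every later slice would mix two snapshots. Grouping on the `time` column avoids that assumption, provided all rows of one scrape share the same timestamp and no two scrapes share one. A sketch with a made-up DataFrame in the same `title`/`num`/`time` layout as `datas.csv`:

```python
import pandas as pd

# Made-up data in the layout of datas.csv; the second snapshot has only 2 rows
df = pd.DataFrame({
    'title': ['A', 'B', 'C', 'A', 'C'],
    'num': [300, 200, 100, 310, 150],
    'time': ['2021/01/01 10:00'] * 3 + ['2021/01/01 10:01'] * 2,
})

# sort=False keeps the snapshots in the order they were appended
for time_label, snapshot in df.groupby('time', sort=False):
    titles = snapshot['title'].tolist()[::-1]  # reversed for the flipped bar chart
    nums = snapshot['num'].tolist()[::-1]
    print(time_label, titles, nums)
```

Inside the chart loop, `titles` and `nums` would take the place of the `i * 15` slices passed to `add_xaxis` and `add_yaxis`, and `time_label` would serve as the title text.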

That concludes this article on how to scrape and save Weibo hot search data with Python. For more on this topic, please search WalkonNet's earlier articles.
