Hands-on Example: Connecting KubeSphere to an External Elasticsearch

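Introduction

The logging component can be enabled at installation time. Doing so installs an ES (Elasticsearch) component that collects the logs of all deployed components, and optionally audit logs, which can then be queried conveniently on the KubeSphere platform.

In practice, however, the ES built into KubeSphere turns out to be heavyweight, and the official documentation also recommends sending logs to an external ES to reduce the load on Kubernetes.

The hands-on steps follow.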

Prerequisites

The ES cluster must support the HTTP protocol.

Test environment:

- IPs: 172.30.10.226, 172.30.10.191, 172.30.10.184
- Port: 9200
- Username: elastic
- Password: changeme
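Before changing anything in the cluster, it can help to confirm that every node answers over plain HTTP with these credentials. The sketch below is a hypothetical helper and is not part of KubeSphere. `check_es_nodes` and the `ES_*` variables are illustrative names filled with the test-environment values above. `_cluster/health` is the standard Elasticsearch cluster health endpoint.

```shell
# Hypothetical helper (not part of KubeSphere): probe every ES node over plain
# HTTP with basic auth. Node list and credentials are the test values above.
ES_NODES="172.30.10.226 172.30.10.191 172.30.10.184"
ES_PORT=9200
ES_USER=elastic
ES_PASS=changeme

check_es_nodes() (
  failed=0
  for ip in $ES_NODES; do
    # -s silences progress, -f turns HTTP errors (e.g. 401) into a non-zero
    # exit, --max-time keeps an unreachable node from hanging the loop
    if curl -sf --max-time 5 -u "$ES_USER:$ES_PASS" \
        "http://$ip:$ES_PORT/_cluster/health" >/dev/null; then
      echo "OK $ip:$ES_PORT"
    else
      echo "FAIL $ip:$ES_PORT"
      failed=1
    fi
  done
  exit "$failed"
)
```

Running `check_es_nodes` prints one OK or FAIL line per node and returns non-zero if any node failed.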

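There are three common approaches:

- load balancing with nginx;
- a single coordinating node;
- load balancing through a custom Service and Endpoints.

This document uses the third approach (load balancing through Endpoints) for the integration.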

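Back up ks-installer

Log in to KubeSphere with an administrator account, go to Platform Management > Cluster Management > CRDs, and search for clusterconfiguration. Under Custom Resources, click ks-installer, choose Edit YAML, and copy the contents as a backup.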
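The same backup can be taken from the command line. This is a minimal sketch with a hypothetical function name. It only runs `kubectl get ... -o yaml` on the ks-installer object and refuses to report success if the dump is empty. The default file name is an arbitrary choice.

```shell
# Hypothetical helper: save the live ks-installer ClusterConfiguration to a file.
backup_ks_installer() (
  # default file name is an assumption; pass your own as $1
  out="${1:-ks-installer-backup-$(date +%Y%m%d%H%M%S).yaml}"
  kubectl get clusterconfiguration ks-installer -n kubesphere-system -o yaml > "$out" || exit 1
  # an empty file means nothing useful was captured
  if [ ! -s "$out" ]; then
    echo "backup is empty: $out" >&2
    exit 1
  fi
  echo "$out"
)
```

`backup_ks_installer` prints the name of the file it wrote.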

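Disable the internal ES and connect to the external ES (skip this step if logging was never enabled)

When the internal Elasticsearch is enabled in the cluster, the following system components and log receivers exist:

Container logs, resource events, and audit logs (a log receiver exists only for the features that are enabled)

The receivers point to the internal Elasticsearch address: elasticsearch-logging-data.kubesphere-logging-system.svc:9200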

Disable the internal ES and uninstall the logging-related pluggable components

Run the following command to edit ks-installer:

```shell
$ kubectl edit cc ks-installer -n kubesphere-system
```

In the ks-installer parameters, change the following values from `true` to `false`:

- `logging.enabled`
- `events.enabled`
- `auditing.enabled`

The `status` section of ks-installer shows the components that are currently enabled, for example:

```yaml
es:
  enabledTime: 2022-08-16T10:33:18CST
  status: enabled
events:
  enabledTime: 2022-04-15T16:22:59CST
  status: enabled
fluentbit:
  enabledTime: 2022-04-15T16:19:46CST
  status: enabled
logging:
  enabledTime: 2022-04-15T16:22:59CST
  status: enabled
```
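The three `enabled` changes can also be made without an interactive editor by sending a JSON merge patch. This is a sketch under one assumption: that `logging`, `events`, and `auditing` sit directly under `spec` of ks-installer, as the field names above suggest. `set_log_components` is a hypothetical helper name.

```shell
# Hypothetical helper: apply logging/events/auditing .enabled in one merge patch.
# Assumes these three keys sit directly under spec of the ks-installer object.
set_log_components() (
  case "$1" in
    true|false) ;;
    *) echo "usage: set_log_components true|false" >&2; exit 2 ;;
  esac
  patch=$(printf '{"spec":{"logging":{"enabled":%s},"events":{"enabled":%s},"auditing":{"enabled":%s}}}' "$1" "$1" "$1")
  kubectl patch clusterconfiguration ks-installer -n kubesphere-system --type merge -p "$patch"
)
```

`set_log_components false` corresponds to the manual edit above.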

Run the following command to check the installation progress:

```shell
$ kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f
# The restart succeeded once the log shows the following:
Collecting installation results ...
#####################################################
### Welcome to KubeSphere! ###
#####################################################

Console: http://172.30.9.xxx:30880
Account: admin
Password: P@88w0rd

NOTES:
  1. After you log into the console, please check the
     monitoring status of service components in
     "Cluster Management". If any service is not
     ready, please wait patiently until all components
     are up and running.
  2. Please change the default password after login.

#####################################################
https://kubesphere.io 2022-08-04 15:53:14
#####################################################
```
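Following the log with `-f` needs someone watching the terminal. For scripts, a polling variant might look like the sketch below. `wait_for_installer` is a hypothetical name.

Call it right after the configuration change is saved. It passes `--since-time` to ignore banners from earlier installer runs, which assumes the local clock is roughly in sync with the cluster. The default timeout and polling interval are arbitrary.

```shell
# Hypothetical helper: poll the ks-installer log until the
# "Welcome to KubeSphere!" banner appears, or give up after a timeout.
wait_for_installer() (
  timeout="${1:-1800}"   # seconds; the default is an assumption
  interval="${2:-30}"
  start=$(date -u +%Y-%m-%dT%H:%M:%SZ)
  pod=$(kubectl get pod -n kubesphere-system -l app=ks-install \
    -o jsonpath='{.items[0].metadata.name}') || exit 1
  waited=0
  while [ "$waited" -lt "$timeout" ]; do
    # only look at log lines written since this function started
    if kubectl logs -n kubesphere-system "$pod" --since-time="$start" \
        | grep -q 'Welcome to KubeSphere!'; then
      echo "ks-installer finished"
      exit 0
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  echo "ks-installer did not finish within ${timeout}s" >&2
  exit 1
)
```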

Run the following commands to uninstall the related pluggable components:

```shell
###### Uninstall the KubeSphere logging system
$ kubectl delete inputs.logging.kubesphere.io -n kubesphere-logging-system tail
###### Uninstall the KubeSphere events system
$ helm delete ks-events -n kubesphere-logging-system
###### Uninstall KubeSphere auditing
$ helm uninstall kube-auditing -n kubesphere-logging-system
$ kubectl delete crd rules.auditing.kubesphere.io
$ kubectl delete crd webhooks.auditing.kubesphere.io
###### Uninstall the logging system, including Elasticsearch
$ kubectl delete crd fluentbitconfigs.logging.kubesphere.io
$ kubectl delete crd fluentbits.logging.kubesphere.io
$ kubectl delete crd inputs.logging.kubesphere.io
$ kubectl delete crd outputs.logging.kubesphere.io
$ kubectl delete crd parsers.logging.kubesphere.io
$ kubectl delete deployments.apps -n kubesphere-logging-system fluentbit-operator
$ helm uninstall elasticsearch-logging --namespace kubesphere-logging-system
$ kubectl delete deployment logsidecar-injector-deploy -n kubesphere-logging-system
$ kubectl delete ns kubesphere-logging-system
```

The following problem may occur during uninstallation:

If a CRD cannot be deleted, try the following command:

```shell
$ kubectl patch crd/<crd-name> -p '{"metadata":{"finalizers":[]}}' --type=merge
```
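When several CRDs need this treatment, the delete and the finalizer patch can be combined. The following is a hedged sketch with a hypothetical function name. It deletes without blocking, waits briefly (the 10-second default is arbitrary), and clears finalizers only if the CRD still exists.

Clearing finalizers skips the cleanup Kubernetes would otherwise perform, so custom resources of that type may be left behind. Treat it as a last resort.

```shell
# Hypothetical helper: delete a CRD and clear its finalizers only if it
# is still present after a short grace period.
force_delete_crd() (
  crd="$1"
  # --wait=false returns immediately instead of blocking on finalizers
  kubectl delete crd "$crd" --ignore-not-found --wait=false || exit 1
  sleep "${2:-10}"
  if kubectl get crd "$crd" >/dev/null 2>&1; then
    kubectl patch "crd/$crd" -p '{"metadata":{"finalizers":[]}}' --type=merge
  fi
)
```

For example, `force_delete_crd outputs.logging.kubesphere.io`.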

Create the namespace

```shell
$ kubectl create ns kubesphere-logging-system
```

Load-balance the ES nodes with a custom Service

es-service.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: es-service
  name: es-service
  namespace: kubesphere-logging-system
spec:
  ports:
  - port: 9200
    name: es
    protocol: TCP
    targetPort: 9200
```

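es-endpoints.yaml

Replace the IP addresses with those of the ES cluster nodes you are connecting to. The Service above has no selector, so Kubernetes does not manage its endpoints. Traffic to es-service goes to the addresses listed in this Endpoints object, which must have the same name and namespace as the Service.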

```yaml
apiVersion: v1
kind: Endpoints
metadata:
  labels:
    app: es-service
  name: es-service
  namespace: kubesphere-logging-system
subsets:
- addresses:
  - ip: 172.30.10.***
  - ip: 172.30.10.***
  - ip: 172.30.10.***
  ports:
  - port: 9200
    name: es
    protocol: TCP
```
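Instead of editing IP addresses by hand, the Endpoints manifest can be generated from a list of node IPs. This is a minimal sketch. `render_es_endpoints` is a hypothetical helper whose output mirrors es-endpoints.yaml above. The name `es-service`, the namespace, and the port name `es` are hard-coded to stay in sync with the Service.

```shell
# Hypothetical helper: print an Endpoints manifest for es-service from node IPs.
render_es_endpoints() (
  if [ "$#" -eq 0 ]; then
    echo "usage: render_es_endpoints IP [IP...]" >&2
    exit 2
  fi
  printf '%s\n' \
    'apiVersion: v1' \
    'kind: Endpoints' \
    'metadata:' \
    '  labels:' \
    '    app: es-service' \
    '  name: es-service' \
    '  namespace: kubesphere-logging-system' \
    'subsets:' \
    '- addresses:'
  # one address entry per ES node
  for ip in "$@"; do
    printf '  - ip: %s\n' "$ip"
  done
  # port name kept in sync with the Service port name "es"
  printf '%s\n' \
    '  ports:' \
    '  - port: 9200' \
    '    name: es' \
    '    protocol: TCP'
)
```

For example, `render_es_endpoints 172.30.10.226 172.30.10.191 172.30.10.184 | kubectl apply -f -` would create or update the Endpoints object directly.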

Run the following commands to create the custom Service:

```shell
$ kubectl apply -f es-service.yaml -n kubesphere-logging-system
$ kubectl apply -f es-endpoints.yaml -n kubesphere-logging-system
# Check the Service
$ kubectl get svc -n kubesphere-logging-system
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
es-service   ClusterIP   109.233.8.178   <none>        9200/TCP   10d
# Check the Endpoints
$ kubectl get ep -n kubesphere-logging-system
NAME         ENDPOINTS                                                  AGE
es-service   172.30.10.***:9200,172.30.10.***:9200,172.30.10.***:9200   10d
```
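Reading the ENDPOINTS column by eye is fine for three nodes. A scripted check could query the addresses with jsonpath instead. This is only a sketch: `expect_es_endpoints` is a hypothetical name, and the expected count is the number of nodes you listed in es-endpoints.yaml.

```shell
# Hypothetical check: es-service should expose exactly N endpoint addresses.
expect_es_endpoints() (
  want="$1"
  ips=$(kubectl get endpoints es-service -n kubesphere-logging-system \
    -o jsonpath='{.subsets[*].addresses[*].ip}') || exit 1
  got=$(printf '%s\n' "$ips" | wc -w)
  got=$((got + 0))   # some wc implementations pad the count with spaces
  if [ "$got" -ne "$want" ]; then
    echo "es-service has $got endpoint(s), expected $want: $ips" >&2
    exit 1
  fi
  echo "es-service endpoints: $ips"
)
```

`expect_es_endpoints 3` fails if any of the three addresses is missing.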

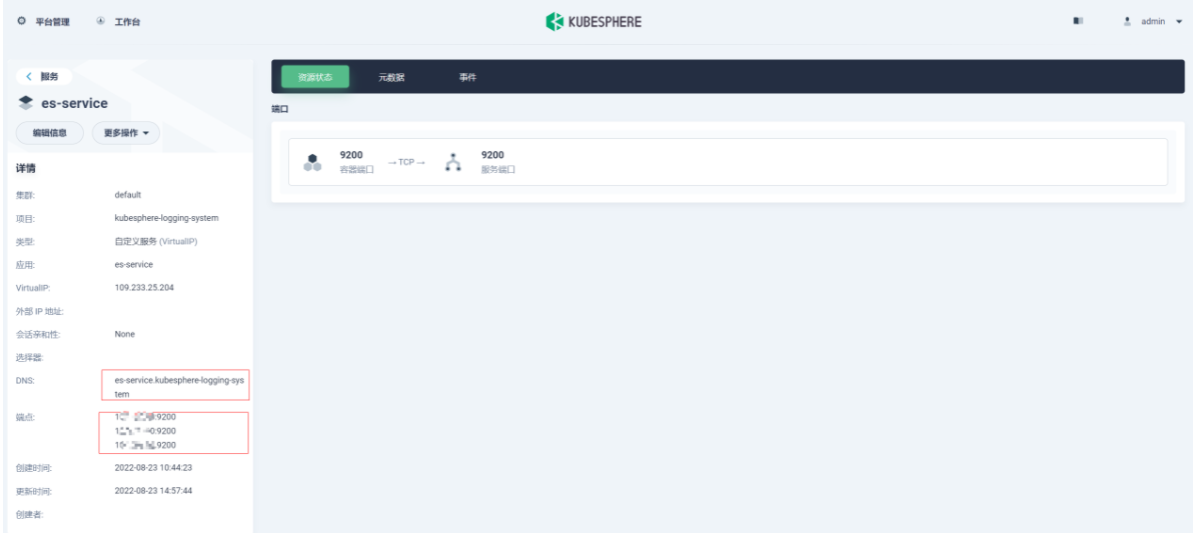

In Platform Management > Cluster Management > Application Workloads > Services, search for es-service.

The address of the es-service Service is es-service.kubesphere-logging-system.svc.
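To confirm that this address actually reaches ES from inside the cluster, one option is a throwaway curl pod. This sketch assumes the public `curlimages/curl` image can be pulled by the cluster. The pod name `es-check` and the function name are hypothetical.

Passing credentials on the command line records them in the pod spec, so this is meant for test environments such as the one above.

```shell
# Hypothetical check: query ES cluster health through es-service from a
# short-lived pod (image curlimages/curl is an assumption).
check_es_service() (
  user="$1"; pass="$2"
  body=$(kubectl run es-check --rm -i --restart=Never \
    --image=curlimages/curl -n kubesphere-logging-system -- \
    curl -s -u "$user:$pass" \
    http://es-service.kubesphere-logging-system.svc:9200/_cluster/health) || exit 1
  # green or yellow means ES answered and the cluster is serving requests
  case "$body" in
    *'"status":"green"'*|*'"status":"yellow"'*) echo "es-service OK: $body" ;;
    *) echo "unexpected response: $body" >&2; exit 1 ;;
  esac
)
```

`check_es_service elastic changeme` succeeds when the reported cluster health is green or yellow.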

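Enable logging and connect to the external ES

In Platform Management > Cluster Management > CRDs, search for clusterconfiguration. Under Custom Resources, click ks-installer and modify the configuration.

To enable container logs and audit logs, set:

- `logging.enabled: true`
- `auditing.enabled: true`

Configure the external ES:

- `es.basicAuth.enabled: true`
- `es.basicAuth.password`
- `es.basicAuth.username`
- `es.externalElasticsearchUrl`
- `es.externalElasticsearchPort`

Change the log retention period with `logMaxAge` (7 days by default).

Change the log index prefix with `elkPrefix` (`logstash` by default). This article uses `dev` for development, `sit` for testing, and `prod` for production.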

```yaml
apiVersion: installer.kubesphere.io/v1alpha1
kind: ClusterConfiguration
metadata:
  labels:
    version: v3.2.1
  name: ks-installer
  namespace: kubesphere-system
spec:
  alerting:
    enabled: false
  auditing:
    enabled: true # changed from false to true
  ...
    es:
      basicAuth:
        enabled: true # changed from false to true
        password: '****' # password
        username: '****' # username
      data:
        volumeSize: 20Gi
      elkPrefix: sit # development: dev, testing: sit, production: prod
      externalElasticsearchPort: '9200' # port
      externalElasticsearchUrl: es-service.kubesphere-logging-system.svc # set to es-service
      logMaxAge: 7 # the default of 7 days is sufficient
      master:
        volumeSize: 4Gi
  ...
```
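The `elkPrefix` value determines the index prefixes that show up later in the kubesphere-config ConfigMap (`ks-<elkPrefix>-log` and `ks-<elkPrefix>-auditing`). A small hypothetical helper can make that mapping explicit and reject values outside the dev/sit/prod convention used here.

```shell
# Hypothetical helper: print the log and auditing index prefixes derived
# from an elkPrefix value, restricted to this article's dev/sit/prod naming.
es_index_prefixes() {
  case "$1" in
    dev|sit|prod) ;;
    *) echo "elkPrefix must be dev, sit or prod (got: $1)" >&2; return 1 ;;
  esac
  printf 'ks-%s-log\nks-%s-auditing\n' "$1" "$1"
}
```

For example, `es_index_prefixes sit` prints `ks-sit-log` and `ks-sit-auditing`, the values expected in the ConfigMap and Outputs below.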

Run the following command to check the installation progress:

```shell
$ kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f
# The restart succeeded once the log shows the following:
Collecting installation results ...
#####################################################
### Welcome to KubeSphere! ###
#####################################################

Console: http://172.30.9.xxx:30880
Account: admin
Password: P@88w0rd

NOTES:
  1. After you log into the console, please check the
     monitoring status of service components in
     "Cluster Management". If any service is not
     ready, please wait patiently until all components
     are up and running.
  2. Please change the default password after login.

#####################################################
https://kubesphere.io 2022-08-04 15:53:14
#####################################################
```

Run the following command to view the corresponding ConfigMap:

```shell
$ kubectl get configmap kubesphere-config -n kubesphere-system -o yaml
```

The key parts are shown below. Check that the es settings have taken effect: `host` should be the custom Service, and the username, password, and index prefix should be correct.

```yaml
logging:
  host: http://es-service.kubesphere-logging-system.svc:9200
  basicAuth: True
  username: "****" # the username you entered
  password: "****" # the password you entered
  indexPrefix: ks-sit-log # dev/sit/prod depending on the environment
auditing:
  enable: true
  webhookURL: https://kube-auditing-webhook-svc.kubesphere-logging-system.svc:6443/audit/webhook/event
  host: http://es-service.kubesphere-logging-system.svc:9200
  basicAuth: True
  username: "****" # the username you entered
  password: "****" # the password you entered
  indexPrefix: ks-sit-auditing # dev/sit/prod depending on the environment
```
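The ConfigMap check can also be scripted. The sketch below (hypothetical name `verify_ks_es_config`) reads the `kubectl get configmap ... -o yaml` output on stdin. It checks only two of the points listed above: that both `host` entries point at es-service, and that both index prefixes match the environment. It does not validate the credentials.

```shell
# Hypothetical check: logging and auditing both use es-service and the
# index prefixes ks-<env>-log / ks-<env>-auditing.
verify_ks_es_config() (
  env="$1"
  cfg=$(cat)
  want_host='host: http://es-service.kubesphere-logging-system.svc:9200'
  # grep -c prints 0 but exits 1 when nothing matches, hence || true
  hosts=$(printf '%s\n' "$cfg" | grep -cF "$want_host" || true)
  if [ "$hosts" -lt 2 ]; then
    echo "es-service host found $hosts time(s), expected at least 2" >&2
    exit 1
  fi
  for idx in "ks-$env-log" "ks-$env-auditing"; do
    if ! printf '%s\n' "$cfg" | grep -qF "indexPrefix: $idx"; then
      echo "missing indexPrefix: $idx" >&2
      exit 1
    fi
  done
  echo "kubesphere-config looks right for $env"
)
```

Usage: `kubectl get configmap kubesphere-config -n kubesphere-system -o yaml | verify_ks_es_config sit`.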

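Run the following commands to edit the corresponding Outputs (skip this if they have already been updated automatically):

- Change `host`.
- Change the index prefix (`ks-dev-`, `ks-sit-`, and `ks-prod-` for development, testing, and production respectively).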

```shell
# Edit the es Output
$ kubectl edit output es -n kubesphere-logging-system
# Change host and logstashPrefix:
#   host: es-service.kubesphere-logging-system.svc
#   logstashPrefix: ks-<environment>-log
# The result looks like this:
```

```yaml
spec:
  es:
    generateID: true
    host: es-service.kubesphere-logging-system.svc # host address
    httpPassword:
      valueFrom:
        secretKeyRef:
          key: password
          name: elasticsearch-credentials
    httpUser:
      valueFrom:
        secretKeyRef:
          key: username
          name: elasticsearch-credentials
    logstashFormat: true
    logstashPrefix: ks-sit-log # set this to the log index for your environment
    port: 9200
    timeKey: '@timestamp'
  matchRegex: '(?:kube|service)\.(.*)'
```

```shell
# Edit the es-auditing Output
$ kubectl edit output es-auditing -n kubesphere-logging-system
# Change host and logstashPrefix:
#   host: es-service.kubesphere-logging-system.svc
#   logstashPrefix: ks-<environment>-auditing
# The result looks like this:
```

```yaml
spec:
  es:
    generateID: true
    host: es-service.kubesphere-logging-system.svc # host address
    httpPassword:
      valueFrom:
        secretKeyRef:
          key: password
          name: elasticsearch-credentials
    httpUser:
      valueFrom:
        secretKeyRef:
          key: username
          name: elasticsearch-credentials
    logstashFormat: true
    logstashPrefix: ks-sit-auditing # set this to the auditing index for your environment
    port: 9200
  match: kube_auditing
```
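If the Outputs were not updated automatically, the two edits can be applied as merge patches instead of `kubectl edit`. The following is a sketch with a hypothetical helper name. It touches only `spec.es.host` and `spec.es.logstashPrefix`, the two fields changed above.

```shell
# Hypothetical helper: set host and logstashPrefix on a fluent-bit Output.
patch_es_output() (
  name="$1"      # es or es-auditing
  prefix="$2"    # e.g. ks-sit-log or ks-sit-auditing
  host="${3:-es-service.kubesphere-logging-system.svc}"
  if [ -z "$name" ] || [ -z "$prefix" ]; then
    echo "usage: patch_es_output NAME PREFIX [HOST]" >&2
    exit 2
  fi
  patch=$(printf '{"spec":{"es":{"host":"%s","logstashPrefix":"%s"}}}' "$host" "$prefix")
  kubectl patch output "$name" -n kubesphere-logging-system --type merge -p "$patch"
)
```

For the sit environment, run `patch_es_output es ks-sit-log` followed by `patch_es_output es-auditing ks-sit-auditing` to reproduce the two edits.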

Restart ks-apiserver

```shell
$ kubectl rollout restart deployment ks-apiserver -n kubesphere-system
```
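`rollout restart` returns as soon as the restart is triggered. To wait until the new ks-apiserver pods are actually ready, `kubectl rollout status` can follow it. Below is a sketch with a hypothetical function name and an arbitrary 300-second default timeout.

```shell
# Hypothetical helper: restart ks-apiserver and block until the rollout
# completes or the timeout expires.
restart_ks_apiserver() {
  kubectl rollout restart deployment ks-apiserver -n kubesphere-system &&
    kubectl rollout status deployment ks-apiserver -n kubesphere-system \
      --timeout="${1:-300s}"
}
```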

Verification

```shell
$ kubectl get po -n kubesphere-logging-system
NAME                                                              READY   STATUS      RESTARTS   AGE
elasticsearch-logging-curator-elasticsearch-curator-276864h2xt2   0/1     Error       0          38h
elasticsearch-logging-curator-elasticsearch-curator-276864wc6bs   0/1     Completed   0          38h
elasticsearch-logging-curator-elasticsearch-curator-276879865wl   0/1     Completed   0          14h
elasticsearch-logging-curator-elasticsearch-curator-276879l7xpf   0/1     Error       0          14h
fluent-bit-4vzq5                                                  1/1     Running     0          47h
fluent-bit-6ckvm                                                  1/1     Running     0          25h
fluent-bit-6jt8d                                                  1/1     Running     0          47h
fluent-bit-88crg                                                  1/1     Running     0          47h
fluent-bit-9ps6z                                                  1/1     Running     0          47h
fluent-bit-djhtx                                                  1/1     Running     0          47h
fluent-bit-dmpfv                                                  1/1     Running     0          47h
fluent-bit-dtr7z                                                  1/1     Running     0          47h
fluent-bit-flxbt                                                  1/1     Running     0          47h
fluent-bit-fnxdk                                                  1/1     Running     0          47h
fluent-bit-gqbrl                                                  1/1     Running     0          47h
fluent-bit-kbzsj                                                  1/1     Running     0          47h
fluent-bit-lbnnh                                                  1/1     Running     0          47h
fluent-bit-nq4g8                                                  1/1     Running     0          47h
fluent-bit-q5shz                                                  1/1     Running     0          47h
fluent-bit-qrb7v                                                  1/1     Running     0          47h
fluent-bit-r26fk                                                  1/1     Running     0          47h
fluent-bit-rfrpd                                                  1/1     Running     0          47h
fluent-bit-s8869                                                  1/1     Running     0          47h
fluent-bit-sp5k4                                                  1/1     Running     0          47h
fluent-bit-vjvhl                                                  1/1     Running     0          47h
fluent-bit-xkksv                                                  1/1     Running     0          47h
fluent-bit-xrlz4                                                  1/1     Running     0          47h
fluentbit-operator-745bf5559f-vnz8w                               1/1     Running     0          47h
kube-auditing-operator-84857bf967-ftbjr                           1/1     Running     0          47h
kube-auditing-webhook-deploy-64cfb8c9f8-hf8g8                     1/1     Running     0          47h
kube-auditing-webhook-deploy-64cfb8c9f8-zf4rd                     1/1     Running     0          47h
logsidecar-injector-deploy-5fb6fdc6dd-fj5vm                       2/2     Running     0          47h
logsidecar-injector-deploy-5fb6fdc6dd-qbhdg                       2/2     Running     0          47h
```
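With many fluent-bit pods, problems are easy to miss in the listing. The hypothetical `unhealthy_pods` filter below reads `kubectl get po` output on stdin. It prints every pod that is neither Completed nor Running with all containers ready. Against the listing above, it would flag the two elasticsearch-logging-curator pods in Error state.

```shell
# Hypothetical filter: print NAME READY STATUS for pods that are neither
# Completed nor Running with all containers ready (e.g. 1/2 or Error).
unhealthy_pods() {
  awk 'NR > 1 {
    split($2, r, "/")   # READY column, e.g. "1/2" -> r[1]=1, r[2]=2
    if ($3 != "Completed" && ($3 != "Running" || r[1] != r[2])) print $1, $2, $3
  }'
}
```

Usage: `kubectl get po -n kubesphere-logging-system | unhealthy_pods`.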

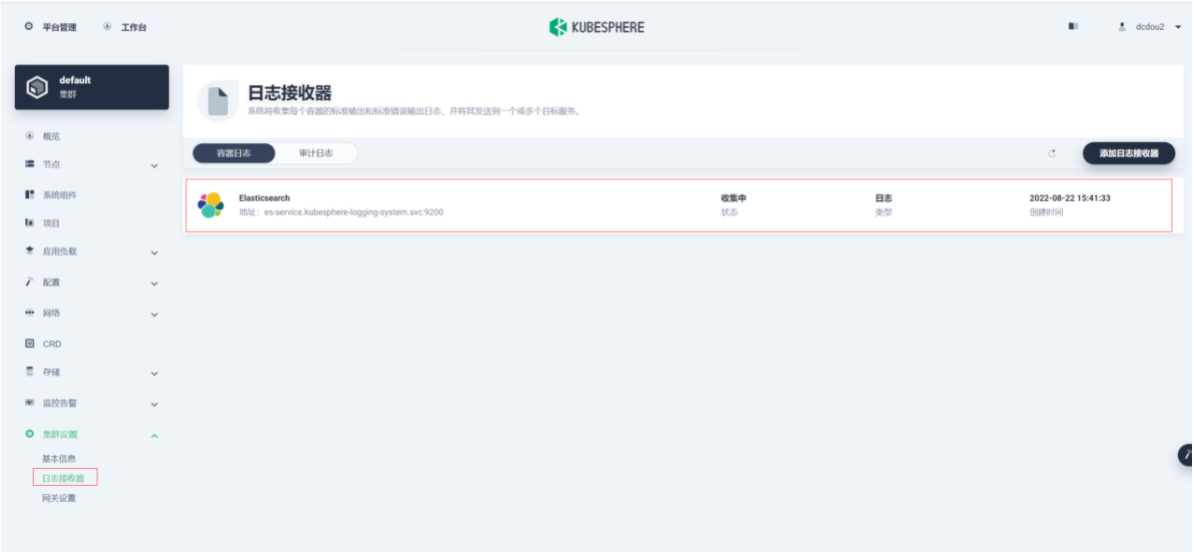

Log receivers:

Querying audit logs:
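Besides querying in the KubeSphere console, one can check on the ES side that the new indices are being written. This sketch calls the standard Elasticsearch `_cat/indices` API with an index pattern built from the environment prefix. The function name is hypothetical, and the credentials default to the test-environment values listed earlier.

```shell
# Hypothetical helper: list ks-<env>-* indices directly on an ES node.
# $1: any ES node address, $2: environment (dev, sit or prod)
es_list_ks_indices() {
  curl -sf --max-time 10 -u "${ES_USER:-elastic}:${ES_PASS:-changeme}" \
    "http://$1:9200/_cat/indices/ks-$2-*?v&s=index"
}
```

`es_list_ks_indices 172.30.10.226 sit` should list indices whose names start with `ks-sit-log` and `ks-sit-auditing`.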
