Face detection with OpenCV + MediaPipe, plus a real-time webcam example

Face landmark detection on a single image

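- Define a helper function that displays an image
- Load the 3D face landmark detection model
- Import the drawing utilities and drawing styles
- Read the image
- Run the model on the image and get the predictions:
  - convert BGR to RGB
  - feed the RGB image to the model and get the predictions
- Count the detected faces
- Visualize the landmark detection result:
  - draw the face mesh and the contours of the key regions onto `annotated_image`
  - draw the face oval, eyebrows, eyes, and lips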
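The BGR-to-RGB step is needed because OpenCV loads color images in BGR channel order, while MediaPipe expects RGB. For an 8-bit, 3-channel image, `cv.cvtColor(img, cv.COLOR_BGR2RGB)` gives the same result as reversing the channel axis. The NumPy-only sketch below uses a made-up 1x2 image (so no file or OpenCV install is needed) to show what the conversion does; it is an illustration, not a replacement for `cvtColor` in the scripts.

```python
import numpy as np

# A tiny 1x2 "image" in OpenCV's BGR order: a pure-blue pixel and a pure-red pixel
img_bgr = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)

# Reversing the last (channel) axis turns BGR into RGB.
# np.ascontiguousarray makes a real copy, because some OpenCV functions
# reject the negative-stride view that img_bgr[..., ::-1] returns.
img_rgb = np.ascontiguousarray(img_bgr[..., ::-1])

print(img_rgb[0, 0].tolist())  # [0, 0, 255]: the blue pixel, now in RGB order
print(img_rgb[0, 1].tolist())  # [255, 0, 0]: the red pixel, now in RGB order
```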

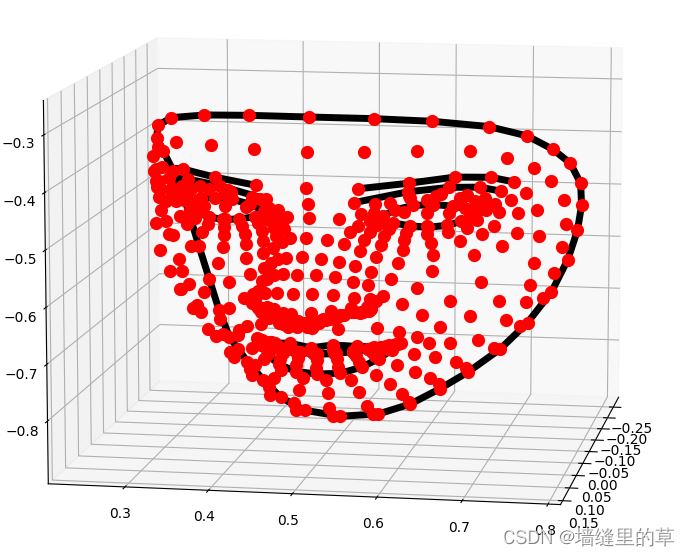

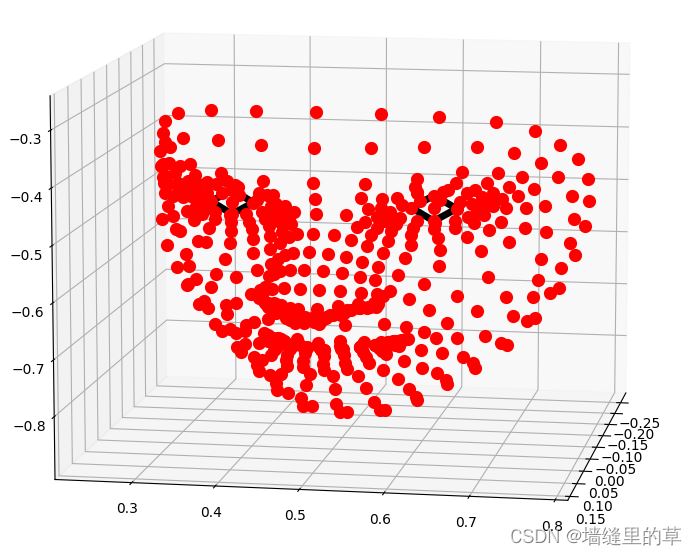

Visualize the face mesh, the contours, and the irises separately in 3D coordinates

```python
import cv2 as cv
import mediapipe as mp
import matplotlib.pyplot as plt

# Helper that displays an OpenCV (BGR) image with matplotlib, which expects RGB
def look_img(img):
    img_RGB = cv.cvtColor(img, cv.COLOR_BGR2RGB)
    plt.imshow(img_RGB)
    plt.show()

# Load the 3D face landmark detection model (Face Mesh)
mp_face_mesh = mp.solutions.face_mesh
# help(mp_face_mesh.FaceMesh)
model = mp_face_mesh.FaceMesh(
    static_image_mode=True,        # True: independent still images / False: webcam or video stream
    refine_landmarks=True,         # refine eye and lip landmarks and add iris landmarks (Attention Mesh model)
    min_detection_confidence=0.5,  # detection threshold; higher is stricter (fewer false positives, more misses)
    min_tracking_confidence=0.5,   # tracking threshold; ignored when static_image_mode=True
)

# Import the drawing utilities and drawing styles
mp_drawing = mp.solutions.drawing_utils
mp_drawing_styles = mp.solutions.drawing_styles

# Read the image (cv.imread returns None if the file cannot be read)
img = cv.imread('img.png')
if img is None:
    raise FileNotFoundError('img.png could not be read')
# look_img(img)

# Run the model on the image: OpenCV loads BGR, MediaPipe expects RGB
img_RGB = cv.cvtColor(img, cv.COLOR_BGR2RGB)
results = model.process(img_RGB)

# Number of detected faces (multi_face_landmarks is None when no face is found)
num_faces = len(results.multi_face_landmarks) if results.multi_face_landmarks else 0
print(num_faces)
# Output: 1

# Visualize the result: draw the face mesh and key-region contours onto annotated_image
annotated_image = img.copy()
if results.multi_face_landmarks:  # at least one face was detected
    for face_landmarks in results.multi_face_landmarks:  # iterate over every face
        # Face mesh (tessellation)
        mp_drawing.draw_landmarks(
            image=annotated_image,
            landmark_list=face_landmarks,
            connections=mp_face_mesh.FACEMESH_TESSELATION,
            # landmark_drawing_spec styles the landmark points; None means the points are not drawn
            # landmark_drawing_spec=mp_drawing.DrawingSpec(thickness=1, circle_radius=2, color=(66, 77, 229)),
            landmark_drawing_spec=None,
            connection_drawing_spec=mp_drawing_styles.get_default_face_mesh_tesselation_style(),
        )
        # Face oval, eyebrows, eyes, and lips
        mp_drawing.draw_landmarks(
            image=annotated_image,
            landmark_list=face_landmarks,
            connections=mp_face_mesh.FACEMESH_CONTOURS,
            landmark_drawing_spec=None,
            connection_drawing_spec=mp_drawing_styles.get_default_face_mesh_contours_style(),
        )
        # Irises (available because refine_landmarks=True); color values must be in 0-255
        mp_drawing.draw_landmarks(
            image=annotated_image,
            landmark_list=face_landmarks,
            connections=mp_face_mesh.FACEMESH_IRISES,
            landmark_drawing_spec=mp_drawing.DrawingSpec(thickness=1, circle_radius=2, color=(128, 255, 229)),
            connection_drawing_spec=mp_drawing_styles.get_default_face_mesh_iris_connections_style(),
        )

cv.imwrite('test.jpg', annotated_image)
look_img(annotated_image)

# Visualize the face mesh, contours, and irises of the first face in 3D coordinates
if results.multi_face_landmarks:
    mp_drawing.plot_landmarks(results.multi_face_landmarks[0], mp_face_mesh.FACEMESH_TESSELATION)
    mp_drawing.plot_landmarks(results.multi_face_landmarks[0], mp_face_mesh.FACEMESH_CONTOURS)
    mp_drawing.plot_landmarks(results.multi_face_landmarks[0], mp_face_mesh.FACEMESH_IRISES)
```
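The landmarks in `results.multi_face_landmarks` are normalized: according to the MediaPipe Face Mesh documentation, `x` and `y` are scaled to [0, 1] by the image width and height, and `z` uses roughly the same scale as `x`. To use a landmark in pixel space, for example to draw your own marker with OpenCV, multiply by the image size. The sketch below uses a hypothetical helper `to_pixel` and a stand-in `Landmark` type so that it runs without MediaPipe; `draw_landmarks` performs a comparable conversion internally, but this is not its code.

```python
import math
from typing import NamedTuple, Optional, Tuple


class Landmark(NamedTuple):
    """Stand-in for a MediaPipe NormalizedLandmark (only x, y, z are used)."""
    x: float
    y: float
    z: float


def to_pixel(lm: Landmark, width: int, height: int) -> Optional[Tuple[int, int]]:
    """Hypothetical helper: map normalized (x, y) to integer pixel coordinates.

    Returns None when the landmark lies outside the image.
    """
    if not (0.0 <= lm.x <= 1.0 and 0.0 <= lm.y <= 1.0):
        return None
    # Clamp to the last valid index so that x == 1.0 still falls inside the image
    px = min(math.floor(lm.x * width), width - 1)
    py = min(math.floor(lm.y * height), height - 1)
    return px, py


# Example with a 640x480 frame
print(to_pixel(Landmark(0.5, 0.25, -0.01), 640, 480))  # (320, 120)
print(to_pixel(Landmark(1.0, 1.0, 0.0), 640, 480))     # (639, 479)
print(to_pixel(Landmark(1.2, 0.5, 0.0), 640, 480))     # None
```

In the scripts of this article, the arguments would be `face_landmarks.landmark[i]`, `img.shape[1]` (width), and `img.shape[0]` (height).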

Face detection on a single image

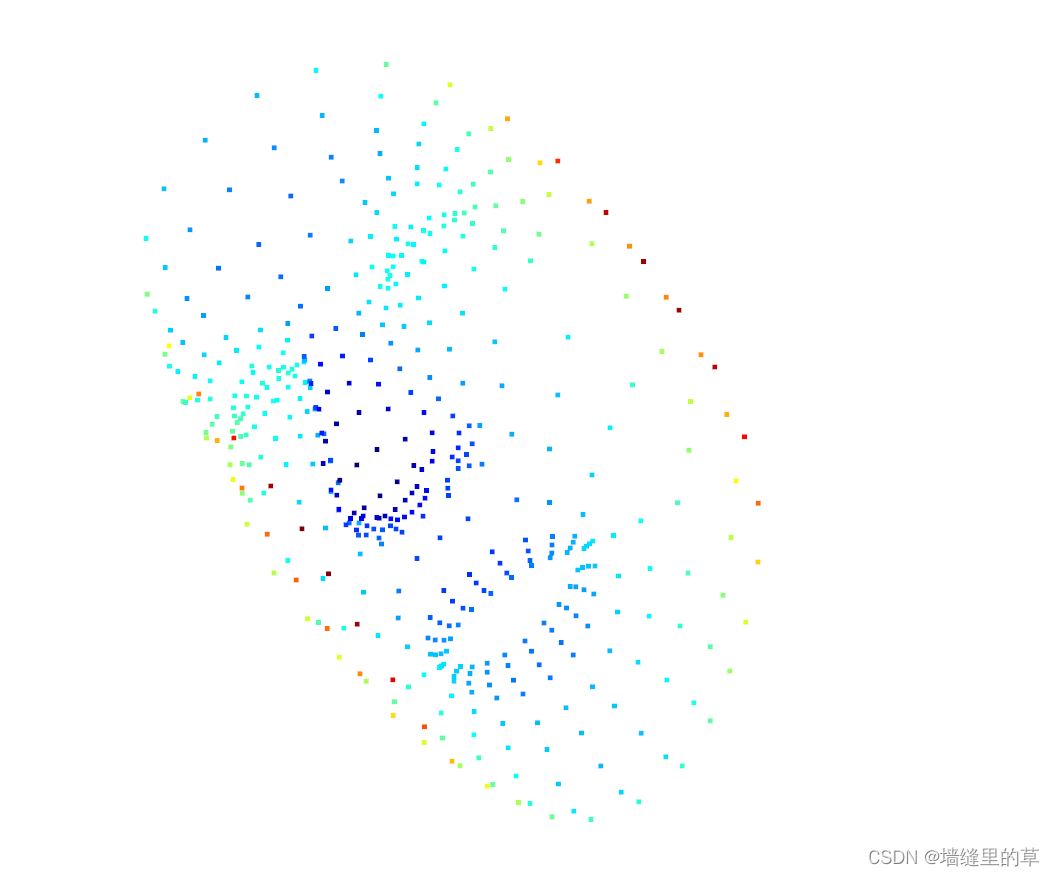

Open3D can also be used to build an interactive 3D model of the landmarks; part of the code is the same as above.
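Because this example raises `max_num_faces` to 40, `results.multi_face_landmarks` can hold several faces, and indexing it at a fixed position fails when fewer faces are found. One way to choose a face robustly is to take the one with the largest bounding box. The sketch below is a hypothetical helper, `largest_face_index`, with a stand-in `Point` type so that it runs without MediaPipe; it is not part of the original code.

```python
from typing import NamedTuple, Optional, Sequence


class Point(NamedTuple):
    """Stand-in for a landmark; only x and y (normalized to [0, 1]) are used."""
    x: float
    y: float


def bbox_area(landmarks: Sequence[Point]) -> float:
    """Area of the axis-aligned box around one face, in normalized units."""
    xs = [p.x for p in landmarks]
    ys = [p.y for p in landmarks]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


def largest_face_index(faces: Optional[Sequence[Sequence[Point]]]) -> Optional[int]:
    """Hypothetical helper: index of the largest detected face, or None if there is none."""
    if not faces:
        return None
    return max(range(len(faces)), key=lambda i: bbox_area(faces[i]))


small = [Point(0.10, 0.10), Point(0.20, 0.25)]  # 0.10 x 0.15 box
large = [Point(0.40, 0.30), Point(0.80, 0.90)]  # 0.40 x 0.60 box
print(largest_face_index([small, large]))  # 1
print(largest_face_index(None))            # None
```

With real results, the call would be `largest_face_index([f.landmark for f in results.multi_face_landmarks or []])`. Comparing areas in normalized units is fine here because every face in the same image is scaled by the same width and height.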

```python
import cv2 as cv
import mediapipe as mp
import numpy as np
import matplotlib.pyplot as plt
import open3d

# Helper that displays an OpenCV (BGR) image with matplotlib
def look_img(img):
    img_RGB = cv.cvtColor(img, cv.COLOR_BGR2RGB)
    plt.imshow(img_RGB)
    plt.show()

# Load the 3D face landmark detection model
mp_face_mesh = mp.solutions.face_mesh
# help(mp_face_mesh.FaceMesh)
model = mp_face_mesh.FaceMesh(
    static_image_mode=True,        # True: still images / False: webcam stream
    refine_landmarks=True,         # refine eyes and lips and add iris landmarks (Attention Mesh model)
    max_num_faces=40,              # maximum number of faces to detect
    min_detection_confidence=0.2,  # lowered from 0.5: more faces are found, at the cost of more false positives
    min_tracking_confidence=0.5,   # ignored when static_image_mode=True
)

# Drawing utilities and a custom drawing style
mp_drawing = mp.solutions.drawing_utils
draw_spec = mp_drawing.DrawingSpec(thickness=2, circle_radius=1, color=(223, 155, 6))

# Read the image
img = cv.imread('../人臉三維關鍵點檢測/dkx.jpg')
if img is None:
    raise FileNotFoundError('the image could not be read')

# Convert BGR to RGB and run the model
img_RGB = cv.cvtColor(img, cv.COLOR_BGR2RGB)
results = model.process(img_RGB)
if not results.multi_face_landmarks:
    raise SystemExit('No face detected')

# Draw the contours of every detected face
for face_landmarks in results.multi_face_landmarks:
    mp_drawing.draw_landmarks(
        image=img,
        landmark_list=face_landmarks,
        connections=mp_face_mesh.FACEMESH_CONTOURS,
        landmark_drawing_spec=draw_spec,
        connection_drawing_spec=draw_spec,
    )
look_img(img)

# Plot one face in 3D; change the index to plot another face, if that many were detected
face = results.multi_face_landmarks[0]
mp_drawing.plot_landmarks(face, mp_face_mesh.FACEMESH_TESSELATION)
mp_drawing.plot_landmarks(face, mp_face_mesh.FACEMESH_CONTOURS)
mp_drawing.plot_landmarks(face, mp_face_mesh.FACEMESH_IRISES)

# Interactive 3D visualization
# Collect the x, y, z coordinates of all landmarks of this face into an (N, 3) array
points = np.array([[lm.x, lm.y, lm.z] for lm in face.landmark])
print(points.shape)  # (478, 3) with refine_landmarks=True

point_cloud = open3d.geometry.PointCloud()
point_cloud.points = open3d.utility.Vector3dVector(points)
open3d.visualization.draw_geometries([point_cloud])
```

Open3D opens an interactive window with the 3D point cloud of the face landmarks; drag with the mouse to rotate it.
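The point cloud above uses the raw normalized coordinates, so for a non-square image the face appears stretched: `x` is divided by the width and `y` by the height. A sketch, assuming you want the points in pixel-like units: multiply `x` by the width, `y` by the height, and `z` by the width, since the MediaPipe documentation describes `z` as using roughly the same scale as `x`. The helper `landmarks_to_points` and the stand-in `Landmark` type are hypothetical.

```python
from typing import NamedTuple, Sequence

import numpy as np


class Landmark(NamedTuple):
    """Stand-in for a MediaPipe NormalizedLandmark."""
    x: float
    y: float
    z: float


def landmarks_to_points(landmarks: Sequence[Landmark], width: int, height: int) -> np.ndarray:
    """Hypothetical helper: return an (N, 3) float64 array in pixel-like units.

    x is scaled by the width, y by the height, and z by the width,
    because MediaPipe documents z as using roughly the same scale as x.
    """
    pts = np.array([[lm.x, lm.y, lm.z] for lm in landmarks], dtype=np.float64)
    return pts * np.array([width, height, width], dtype=np.float64)


lms = [Landmark(0.5, 0.5, 0.0), Landmark(0.25, 0.75, -0.05)]
points = landmarks_to_points(lms, 640, 480)
print(points.shape)  # (2, 3)
x, y, z = points[1]  # about (160, 360, -32)
```

In the Open3D script, this would replace the raw array: `point_cloud.points = open3d.utility.Vector3dVector(landmarks_to_points(face.landmark, img.shape[1], img.shape[0]))`.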

Real-time landmark detection from a webcam

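- Define the image visualization function
- Load the 3D face landmark detection model
- Import the drawing utilities and drawing styles
- Define the function that processes a single frame

The main code is similar to the image example above.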

```python
import time

import cv2 as cv
import mediapipe as mp

# Load the 3D face landmark detection model
mp_face_mesh = mp.solutions.face_mesh
# help(mp_face_mesh.FaceMesh)
model = mp_face_mesh.FaceMesh(
    static_image_mode=False,       # False: video stream, landmarks are tracked across frames
    refine_landmarks=True,         # refine eyes and lips and add iris landmarks (Attention Mesh model)
    max_num_faces=5,               # maximum number of faces to detect
    min_detection_confidence=0.5,  # detection threshold; higher is stricter
    min_tracking_confidence=0.5,   # below this, face detection is re-run on the next frame
)

# Import the drawing utilities and drawing styles
mp_drawing = mp.solutions.drawing_utils
mp_drawing_styles = mp.solutions.drawing_styles

# Process a single frame: detect landmarks, draw them, and overlay the FPS
def process_frame(img):
    # Record when processing of this frame starts
    start_time = time.time()
    img_RGB = cv.cvtColor(img, cv.COLOR_BGR2RGB)
    results = model.process(img_RGB)
    if results.multi_face_landmarks:
        for face_landmarks in results.multi_face_landmarks:
            # Face mesh; landmark_drawing_spec=None means the landmark points are not drawn
            mp_drawing.draw_landmarks(
                image=img,
                landmark_list=face_landmarks,
                connections=mp_face_mesh.FACEMESH_TESSELATION,
                landmark_drawing_spec=None,
                connection_drawing_spec=mp_drawing_styles.get_default_face_mesh_tesselation_style(),
            )
            # Face oval, eyebrows, eyes, and lips
            mp_drawing.draw_landmarks(
                image=img,
                landmark_list=face_landmarks,
                connections=mp_face_mesh.FACEMESH_CONTOURS,
                landmark_drawing_spec=None,
                connection_drawing_spec=mp_drawing_styles.get_default_face_mesh_contours_style(),
            )
            # Irises
            mp_drawing.draw_landmarks(
                image=img,
                landmark_list=face_landmarks,
                connections=mp_face_mesh.FACEMESH_IRISES,
                landmark_drawing_spec=None,
                connection_drawing_spec=mp_drawing_styles.get_default_face_mesh_iris_connections_style(),
            )
    else:
        img = cv.putText(img, 'NO FACE DETECTED', (25, 50), cv.FONT_HERSHEY_SIMPLEX, 1.25,
                         (218, 112, 214), 1, 8)

    # Record when processing of this frame ends and compute frames per second
    end_time = time.time()
    FPS = 1 / max(end_time - start_time, 1e-6)  # guard against a zero interval
    scaler = 1
    img = cv.putText(img, 'FPS ' + str(int(FPS)), (25 * scaler, 100 * scaler),
                     cv.FONT_HERSHEY_SIMPLEX, 1.25 * scaler, (0, 0, 255), 1, 8)
    return img

# Open the default webcam
cap = cv.VideoCapture(0)

# Loop until a frame cannot be read or 'q' is pressed
while cap.isOpened():
    success, frame = cap.read()
    if not success:
        print('ERROR: could not read a frame')
        break
    frame = process_frame(frame)
    # Show the processed three-channel image
    cv.imshow('my_window', frame)
    if cv.waitKey(1) & 0xFF == ord('q'):
        break

cap.release()
cv.destroyAllWindows()
```
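The FPS overlay in `process_frame` is computed from a single frame's processing time, so the number jumps from frame to frame and excludes the time spent in `cap.read()` and `cv.imshow`. If a steadier reading is wanted, one option (not part of the original code) is an exponential moving average over the interval between consecutive frames. `FpsMeter` below is a hypothetical helper; it accepts timestamps as arguments so that it can be tested without a camera.

```python
import time
from typing import Optional


class FpsMeter:
    """Hypothetical helper: FPS smoothed with an exponential moving average."""

    def __init__(self, alpha: float = 0.1) -> None:
        self.alpha = alpha                   # weight given to the newest frame interval
        self.last: Optional[float] = None    # timestamp of the previous frame
        self.avg_dt: Optional[float] = None  # smoothed seconds per frame

    def tick(self, now: Optional[float] = None) -> float:
        """Register a frame at `now` (default: time.perf_counter()) and return the smoothed FPS."""
        now = time.perf_counter() if now is None else now
        if self.last is not None:
            dt = now - self.last
            if self.avg_dt is None:
                self.avg_dt = dt
            else:
                self.avg_dt = (1 - self.alpha) * self.avg_dt + self.alpha * dt
        self.last = now
        if not self.avg_dt:  # no interval measured yet, or a zero interval
            return 0.0
        return 1.0 / self.avg_dt


meter = FpsMeter(alpha=0.5)
for t in (0.0, 0.1, 0.2, 0.3):  # frames arriving every 100 ms
    fps = meter.tick(t)
print(round(fps))  # 10
```

In the webcam loop, one would create `meter = FpsMeter()` before the `while` loop and call `meter.tick()` once per iteration; a smaller `alpha` gives a smoother but slower-reacting value.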

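This concludes the article on face detection and real-time webcam detection with OpenCV + MediaPipe. For more on OpenCV face detection and real-time webcam processing, search the earlier articles on WalkonNet. We hope you will continue to support WalkonNet!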
