Python font anti-scraping example on a policy website (with complete code)

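Font-based anti-scraping is another common anti-crawler technique. Sites that use it ship a custom font file: the page displays normally in the browser, but the data a crawler scrapes is either garbled or turns into different characters. Below we defeat one kind of font anti-scraping.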

1 Font anti-scraping case

Source website: Chacewang (查策網), https://www.chacewang.com/chanye/news

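The dates and times displayed on the page differ from those in the HTML source. Every time the page is refreshed, the times in the HTML change and never match what is actually shown.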
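Here the obfuscation appears to act on the digits of the dates: the custom font draws one digit where the HTML contains another, so the scraped text has to go through a digit-to-digit substitution before it matches the browser. A minimal sketch, assuming such a mapping has already been recovered; the `mapping` and the sample string below are made up for illustration and are not taken from the site.

```python
def decode_digits(text, mapping):
    """Replace each obfuscated digit in text using mapping (HTML char -> real char).

    Characters not in mapping (such as '-' or ':') are left unchanged.
    """
    table = str.maketrans(mapping)
    return text.translate(table)


# Hypothetical mapping: in the HTML, '0' is drawn as '3', '1' as '7', and so on.
mapping = {'0': '3', '1': '7', '2': '0', '3': '9', '4': '1',
           '5': '8', '6': '2', '7': '6', '8': '4', '9': '5'}

print(decode_digits('6264-46-02', mapping))  # prints 2021-12-30
```

`str.translate` handles the whole string in one pass, so every digit is replaced at most once.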

2 Environment

Python 3.6 + Windows 10 Pro + PyCharm 2019.1.3 Professional

3 Install the third-party Python libraries

pip3 install requests==2.25.1

pip3 install fontTools==4.28.5

4 Inspect the woff file

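Refresh the page and a woff file shows up in the browser's Network panel. The woff file is the obfuscated font; we need to parse its contents to work out the relationship between the obfuscated glyphs and the actual characters.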
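The woff URL normally comes from an `@font-face` rule in the page's CSS. To locate it programmatically instead of copying it from the Network panel, a regular expression over the stylesheet text is often enough. A sketch, assuming the font is declared with a plain `url(...)` in `src`; the CSS string and the `find_woff_urls` helper are hypothetical examples, not the site's real stylesheet.

```python
import re

# Matches url(...) entries that point at a .woff file, quoted or unquoted,
# with an optional query string such as ?version=...
WOFF_URL = re.compile(r"""url\(\s*['"]?([^'")\s]+?\.woff(?:\?[^'")\s]*)?)['"]?\s*\)""")


def find_woff_urls(css_text):
    """Return every .woff URL referenced by url(...) in css_text."""
    return WOFF_URL.findall(css_text)


css = """
@font-face {
  font-family: "customfont";
  src: url("https://example.com/fonts/abc123.woff?version=1.0") format("woff");
}
"""
print(find_woff_urls(css))
```

Because the font file name on this site looks randomized, extracting it from the CSS on each visit is likely more robust than hard-coding one URL.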

5 Solving the font anti-scraping with the woff file, step by step

5.1 Import the third-party libraries

```python
import requests
from fontTools.ttLib import TTFont
```

5.2 Request the woff URL and save the woff file locally

```python
# Download the woff file
url = "https://web.chace-ai.com/media/fonts/fFz9g4IQsHDyEaXI.woff?version=9.666110168223948"
headers = {
    'accept': '*/*',
    'accept-encoding': 'gzip, deflate, br',
    'accept-language': 'zh-CN,zh;q=0.9',
    'origin': 'https://www.chacewang.com',
    'referer': 'https://www.chacewang.com/',
    'user-agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.93 Safari/537.36',
}
resp = requests.get(url=url, headers=headers, timeout=10)
resp.raise_for_status()  # stop early if the request failed

save_woff = 'demo.woff'
with open(save_woff, "wb") as f:  # the with block closes the file, no f.close() needed
    f.write(resp.content)
```
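Before parsing, it can be worth checking that the server really returned a font and not, for example, an HTML error page (which may happen if the `referer` check fails). WOFF 1.0 files start with the 4-byte signature `wOFF` and WOFF2 files with `wOF2`; the `font_kind` helper below is a hypothetical sketch built on those signatures.

```python
def font_kind(data):
    """Guess the font container type from the first four bytes of data."""
    signatures = {
        b'wOFF': 'woff',
        b'wOF2': 'woff2',
        b'\x00\x01\x00\x00': 'truetype',
        b'OTTO': 'opentype-cff',
    }
    return signatures.get(bytes(data[:4]), 'unknown')


# With the downloaded response this would be: font_kind(resp.content)
print(font_kind(b'wOFF\x00\x01\x00\x00'))  # woff
print(font_kind(b'<!DOCTYPE html>'))       # unknown
```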

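5.3 View the contents of the woff file, in either of two ways

5.3.1 Open it with a woff file viewer and read the result

5.3.2 Convert the woff file to an XML file, which also makes the font anti-scraping easier to analyze

```python
# Convert the woff file to XML
font = TTFont(save_woff)
save_xml = 'demo.xml'
font.saveXML(save_xml)  # write all font tables to an XML (TTX) file
```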

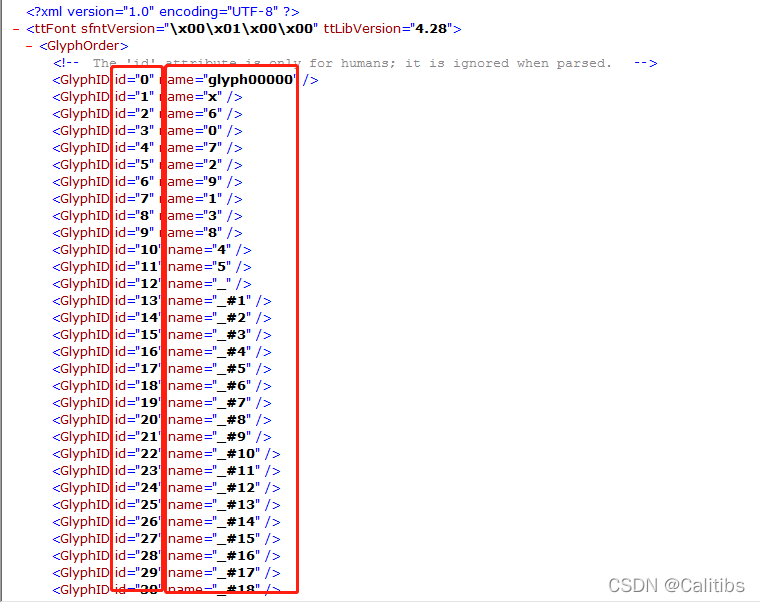

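The resulting XML file looks like this:

Generally, font anti-scraping shows up in one of two forms:

Form 1: a correspondence between the GlyphID id and the GlyphID name.

Form 2: the characters are defined by the x/y coordinates of the points in each TTGlyph (identified by its name).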
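Both forms can also be read straight from demo.xml with the standard library. A sketch, assuming the usual TTX layout written by `saveXML`: a `<GlyphOrder>` element holding `<GlyphID id=… name=…/>` entries (form 1) and a `<glyf>` element holding `<TTGlyph name=…>` elements whose `<pt x=… y=…/>` children are the outline points (form 2). The small XML string is a hand-written sample, not the site's font, and `read_ttx` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET


def read_ttx(xml_text):
    """Return (glyph_order, glyph_points) parsed from TTX-format XML.

    glyph_order:  {glyph id (int): glyph name}           (form 1)
    glyph_points: {glyph name: [(x, y), ...] in order}    (form 2)
    """
    root = ET.fromstring(xml_text)
    glyph_order = {int(g.get('id')): g.get('name')
                   for g in root.iterfind('GlyphOrder/GlyphID')}
    glyph_points = {}
    for glyph in root.iterfind('glyf/TTGlyph'):
        glyph_points[glyph.get('name')] = [
            (int(pt.get('x')), int(pt.get('y'))) for pt in glyph.iter('pt')
        ]
    return glyph_order, glyph_points


sample = """<ttFont>
  <GlyphOrder>
    <GlyphID id="0" name=".notdef"/>
    <GlyphID id="1" name="3"/>
  </GlyphOrder>
  <glyf>
    <TTGlyph name="3">
      <contour>
        <pt x="544" y="696" on="1"/>
        <pt x="247" y="-29" on="1"/>
      </contour>
      <instructions/>
    </TTGlyph>
  </glyf>
</ttFont>"""

order, points = read_ttx(sample)
print(order)   # {0: '.notdef', 1: '3'}
print(points)  # {'3': [(544, 696), (247, -29)]}
```

For the real file, `read_ttx(open('demo.xml', 'rb').read())` should work, since `ET.fromstring` also accepts bytes.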

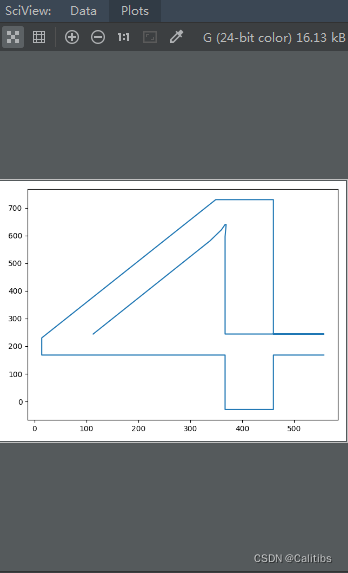

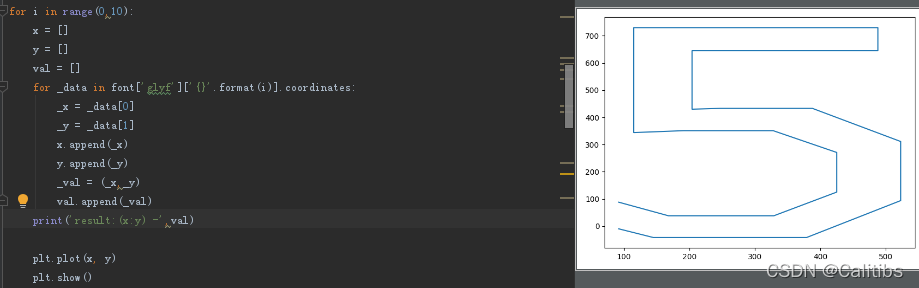

Comparing the two, form 1 clearly does not give the result we want, so we work with form 2.

5.4 Get all the coordinates, display them with matplotlib and process the result

Repeating this for every glyph gives the coordinates of all of them:
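The author plots each glyph's points with matplotlib to see which digit it draws. As a dependency-free stand-in for that plot (a swapped-in technique, not the author's code), the points can be dropped onto a coarse character grid; the grid size and the `preview_points` name are arbitrary choices for this sketch.

```python
def preview_points(points, width=20, height=10):
    """Render (x, y) outline points as a rough ASCII scatter plot.

    Only the points themselves are drawn (no curves), which is often enough
    to recognise a digit. y grows upwards, as in font units.
    """
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    x_min, x_max = min(xs), max(xs)
    y_min, y_max = min(ys), max(ys)
    grid = [[' '] * width for _ in range(height)]
    for x, y in points:
        col = round((x - x_min) / max(x_max - x_min, 1) * (width - 1))
        row = round((y_max - y) / max(y_max - y_min, 1) * (height - 1))
        grid[row][col] = '*'
    return '\n'.join(''.join(row) for row in grid)


# Outline points of the glyph that dict_data maps to '7'
seven = [(544, 696), (247, -29), (148, -29), (430, 645), (51, 645), (51, 730), (544, 730)]
print(preview_points(seven))
```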

5.5 Build the mapping between the obfuscated glyphs and the original characters

```python
# Coordinate fingerprint of each obfuscated glyph -> the digit it actually draws
dict_data = {
    '[(96, 74), (158, 38), (231, 38), (333, 38), (445, 198), (445, 346), (444, 345), (443, 346), (393, 251), (275, 251), (179, 251), (54, 382), (54, 487), (54, 599), (191, 742), (300, 742), (413, 742), (541, 565), (541, 395), (541, 185), (376, -42), (231, -42), (151, -42), (96, -16), (151, 500), (151, 423), (229, 334), (298, 334), (358, 334), (439, 415), (439, 474), (439, 555), (359, 663), (292, 663), (231, 663), (151, 570)]': '9',
    '[(75, 98), (153, 38), (251, 38), (329, 38), (421, 115), (421, 179), (421, 323), (215, 323), (151, 323), (151, 403), (212, 403), (394, 403), (394, 538), (394, 663), (255, 663), (175, 663), (104, 609), (104, 699), (179, 742), (278, 742), (375, 742), (493, 641), (493, 560), (493, 411), (341, 369), (341, 367), (423, 358), (519, 260), (519, 186), (519, 84), (371, -42), (249, -42), (141, -42), (75, -1)]': '3',
    '[(518, -29), (57, -29), (57, 54), (277, 274), (368, 365), (430, 472), (430, 528), (430, 593), (358, 662), (289, 662), (188, 662), (96, 576), (96, 673), (185, 742), (304, 742), (407, 742), (525, 632), (525, 538), (525, 468), (449, 332), (343, 227), (170, 58), (170, 56), (518, 56)]': '2',
    '[(92, 88), (168, 38), (251, 38), (329, 38), (425, 125), (425, 271), (328, 351), (235, 351), (191, 351), (115, 344), (115, 730), (488, 730), (488, 645), (204, 645), (204, 430), (247, 433), (268, 433), (388, 433), (523, 311), (523, 205), (523, 94), (379, -42), (252, -42), (145, -42), (92, -10)]': '5',
    '[(503, 632), (444, 663), (380, 663), (281, 663), (162, 491), (161, 343), (163, 343), (217, 447), (335, 447), (433, 447), (551, 319), (551, 213), (551, 101), (415, -42), (310, -42), (194, -42), (63, 139), (63, 305), (63, 506), (236, 742), (377, 742), (457, 742), (503, 720), (167, 219), (167, 144), (246, 38), (312, 38), (375, 38), (454, 130), (454, 202), (454, 281), (380, 368), (311, 368), (247, 368), (167, 280)]': '6',
    '[(557, 168), (460, 168), (460, -29), (367, -29), (367, 168), (14, 168), (14, 230), (349, 730), (460, 730), (460, 244), (557, 244), (367, 244), (367, 562), (367, 596), (369, 640), (367, 640), (360, 621), (338, 581), (113, 244)]': '4',
    '[(544, 696), (247, -29), (148, -29), (430, 645), (51, 645), (51, 730), (544, 730)]': '7',
    '[(49, 335), (49, 536), (183, 742), (309, 742), (550, 742), (550, 354), (550, 162), (414, -42), (292, -42), (176, -42), (49, 151), (147, 340), (147, 38), (301, 38), (452, 38), (452, 345), (452, 663), (304, 663), (147, 663)]': '0',
    '[(219, 370), (84, 433), (84, 553), (84, 637), (211, 742), (310, 742), (401, 742), (518, 644), (518, 565), (518, 439), (377, 371), (377, 369), (543, 309), (543, 165), (543, 70), (406, -42), (284, -42), (183, -42), (57, 69), (57, 157), (57, 302), (219, 368), (421, 554), (421, 605), (358, 664), (303, 664), (252, 664), (181, 602), (181, 555), (181, 460), (300, 410), (421, 461), (294, 325), (153, 268), (153, 162), (153, 107), (236, 37), (366, 37), (446, 107), (446, 159), (446, 269)]': '8',
    '[(532, -29), (97, -29), (97, 54), (267, 54), (267, 630), (93, 579), (93, 668), (363, 746), (363, 54), (532, 54)]': '1',
}

# The actual digits, in the same order as the entries above
data_num_list = [9, 3, 2, 5, 6, 4, 7, 0, 8, 1]
```
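Looking glyphs up by `str(val)` only works if the coordinates match exactly, character for character. If a later version of the font moved points slightly, a tolerant comparison would be more forgiving. One possible approach (our own suggestion, not from the original article): compare only glyphs with the same number of points and choose the reference with the smallest total coordinate difference, rejecting anything above a threshold. The `match_glyph` helper and its `max_diff` threshold are arbitrary assumptions.

```python
def match_glyph(points, references, max_diff=50):
    """Return the digit whose reference outline is closest to points, or None.

    points:     [(x, y), ...] for one glyph
    references: {digit: [(x, y), ...]}
    max_diff:   largest accepted sum of |dx| + |dy| over all points (arbitrary)
    """
    best_digit, best_diff = None, None
    for digit, ref in references.items():
        if len(ref) != len(points):
            continue  # different point count: treat as a different shape
        diff = sum(abs(x1 - x2) + abs(y1 - y2)
                   for (x1, y1), (x2, y2) in zip(points, ref))
        if best_diff is None or diff < best_diff:
            best_digit, best_diff = digit, diff
    if best_diff is not None and best_diff <= max_diff:
        return best_digit
    return None


# References could be built from dict_data, whose keys are str(list of tuples):
#   import ast; references = {v: ast.literal_eval(k) for k, v in dict_data.items()}
references = {
    '7': [(544, 696), (247, -29), (148, -29), (430, 645), (51, 645), (51, 730), (544, 730)],
    '1': [(532, -29), (97, -29), (97, 54), (267, 54), (267, 630), (93, 579), (93, 668), (363, 746), (363, 54), (532, 54)],
}
shifted_seven = [(x + 2, y - 1) for x, y in references['7']]
print(match_glyph(shifted_seven, references))  # 7
```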

5.6 Result

Every glyph maps one to one onto its digit. Problem solved!

6 Complete code

```python
# -*- coding: utf-8 -*-
import requests
from fontTools.ttLib import TTFont

# Download the woff file
url = "https://web.chace-ai.com/media/fonts/fFz9g4IQsHDyEaXI.woff?version=9.666110168223948"
headers = {
    'accept': '*/*',
    'accept-encoding': 'gzip, deflate, br',
    'accept-language': 'zh-CN,zh;q=0.9',
    'origin': 'https://www.chacewang.com',
    'referer': 'https://www.chacewang.com/',
    'user-agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.93 Safari/537.36',
}
resp = requests.get(url=url, headers=headers, timeout=10)
resp.raise_for_status()

save_woff = 'demo.woff'
with open(save_woff, "wb") as f:  # the with block closes the file automatically
    f.write(resp.content)

# Convert the woff file to XML for manual inspection
font = TTFont(save_woff)
save_xml = 'demo.xml'
font.saveXML(save_xml)

# Coordinate fingerprint of each obfuscated glyph -> the digit it actually draws
dict_data = {
    '[(96, 74), (158, 38), (231, 38), (333, 38), (445, 198), (445, 346), (444, 345), (443, 346), (393, 251), (275, 251), (179, 251), (54, 382), (54, 487), (54, 599), (191, 742), (300, 742), (413, 742), (541, 565), (541, 395), (541, 185), (376, -42), (231, -42), (151, -42), (96, -16), (151, 500), (151, 423), (229, 334), (298, 334), (358, 334), (439, 415), (439, 474), (439, 555), (359, 663), (292, 663), (231, 663), (151, 570)]': '9',
    '[(75, 98), (153, 38), (251, 38), (329, 38), (421, 115), (421, 179), (421, 323), (215, 323), (151, 323), (151, 403), (212, 403), (394, 403), (394, 538), (394, 663), (255, 663), (175, 663), (104, 609), (104, 699), (179, 742), (278, 742), (375, 742), (493, 641), (493, 560), (493, 411), (341, 369), (341, 367), (423, 358), (519, 260), (519, 186), (519, 84), (371, -42), (249, -42), (141, -42), (75, -1)]': '3',
    '[(518, -29), (57, -29), (57, 54), (277, 274), (368, 365), (430, 472), (430, 528), (430, 593), (358, 662), (289, 662), (188, 662), (96, 576), (96, 673), (185, 742), (304, 742), (407, 742), (525, 632), (525, 538), (525, 468), (449, 332), (343, 227), (170, 58), (170, 56), (518, 56)]': '2',
    '[(92, 88), (168, 38), (251, 38), (329, 38), (425, 125), (425, 271), (328, 351), (235, 351), (191, 351), (115, 344), (115, 730), (488, 730), (488, 645), (204, 645), (204, 430), (247, 433), (268, 433), (388, 433), (523, 311), (523, 205), (523, 94), (379, -42), (252, -42), (145, -42), (92, -10)]': '5',
    '[(503, 632), (444, 663), (380, 663), (281, 663), (162, 491), (161, 343), (163, 343), (217, 447), (335, 447), (433, 447), (551, 319), (551, 213), (551, 101), (415, -42), (310, -42), (194, -42), (63, 139), (63, 305), (63, 506), (236, 742), (377, 742), (457, 742), (503, 720), (167, 219), (167, 144), (246, 38), (312, 38), (375, 38), (454, 130), (454, 202), (454, 281), (380, 368), (311, 368), (247, 368), (167, 280)]': '6',
    '[(557, 168), (460, 168), (460, -29), (367, -29), (367, 168), (14, 168), (14, 230), (349, 730), (460, 730), (460, 244), (557, 244), (367, 244), (367, 562), (367, 596), (369, 640), (367, 640), (360, 621), (338, 581), (113, 244)]': '4',
    '[(544, 696), (247, -29), (148, -29), (430, 645), (51, 645), (51, 730), (544, 730)]': '7',
    '[(49, 335), (49, 536), (183, 742), (309, 742), (550, 742), (550, 354), (550, 162), (414, -42), (292, -42), (176, -42), (49, 151), (147, 340), (147, 38), (301, 38), (452, 38), (452, 345), (452, 663), (304, 663), (147, 663)]': '0',
    '[(219, 370), (84, 433), (84, 553), (84, 637), (211, 742), (310, 742), (401, 742), (518, 644), (518, 565), (518, 439), (377, 371), (377, 369), (543, 309), (543, 165), (543, 70), (406, -42), (284, -42), (183, -42), (57, 69), (57, 157), (57, 302), (219, 368), (421, 554), (421, 605), (358, 664), (303, 664), (252, 664), (181, 602), (181, 555), (181, 460), (300, 410), (421, 461), (294, 325), (153, 268), (153, 162), (153, 107), (236, 37), (366, 37), (446, 107), (446, 159), (446, 269)]': '8',
    '[(532, -29), (97, -29), (97, 54), (267, 54), (267, 630), (93, 579), (93, 668), (363, 746), (363, 54), (532, 54)]': '1',
}

# The actual digits, in the same order as the entries above
data_num_list = [9, 3, 2, 5, 6, 4, 7, 0, 8, 1]

result_list = []
for i in range(10):
    # Glyph names '0' ... '9', as used by this font
    glyph = font['glyf'][str(i)]
    val = [(x, y) for x, y in glyph.coordinates]
    # Look up the digit this outline actually draws (None if unknown)
    result_list.append(dict_data.get(str(val)))

# Rotate left by one position, as in the author's original code
_result = result_list[1:] + result_list[:1]
print(_result)
```

PS: This is my solution to this particular font anti-scraping case; suggestions and questions are welcome.

